The Rising Threat of Software Supply Chain Attacks: Managing Dependencies of Open Source Projects

Paolo Mainardi | 15 August 2023

Open source is flourishing like never before. It is estimated that at least 90% of companies use it, and according to a 2022 report by Synopsys, 97% of commercial codebases contain open source components.

Today, open source represents a significant competitive advantage and opportunity in every sector where it is applied. It allows anyone to build products by assembling, remixing, and reusing existing modules, libraries, and tools instead of building everything from scratch. This means faster progress, shorter time-to-market, and, through the adoption of common standards, a more competitive industry for everyone involved.

According to one report, around 70% to 90% of a modern application "stack" consists of pre-existing OSS. This flexibility, however, comes at a cost.

Managing products made up of numerous external dependencies across various layers, from the operating system to cloud containers and CI/CD tools, as well as private, public, or hybrid cloud solutions, can be overwhelming. Unfortunately, attackers find this landscape appealing: by targeting the supply chain rather than the vulnerabilities of a single codebase, they can reach many victims at once.

Software supply chain attacks are affecting the entire OSS ecosystem and becoming increasingly public and disruptive. Sonatype has maintained an up-to-date timeline of software supply chain attacks since 2017.

In its annual State of the Software Supply Chain report, Sonatype shows that supply chain attacks have grown by an average of 742% per year.

Source: https://www.sonatype.com/state-of-the-software-supply-chain/open-source-supply-demand-security

There are multiple factors contributing to this issue.

On the one hand, there has been a massive demand for open-source components like frameworks, libraries, and pre-trained AI models in recent years.

On the other hand, new and evolving cyber-attacks have emerged that target the supply chain, such as Dependency (or Namespace) Confusion, Typosquatting, Malicious Code Injection, and, more recently, Protestware.
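To make typosquatting more concrete, here is a minimal Python sketch of how a tool might flag a requested package name that is suspiciously close to, but not the same as, a popular one. The list of popular names and the 0.8 similarity cutoff are illustrative assumptions; real registries and scanners combine many more signals than string similarity.

```python
import difflib
from typing import Optional

# Hypothetical list of popular package names; a real check would load the
# registry's most-downloaded packages instead of using a hard-coded tuple.
POPULAR_PACKAGES = ("requests", "django", "numpy", "react", "lodash", "express")


def possible_typosquat(name: str, popular=POPULAR_PACKAGES, cutoff: float = 0.8) -> Optional[str]:
    """Return the popular package that `name` seems to imitate, or None.

    Exact matches are treated as legitimate; different names whose difflib
    similarity ratio (0.0 to 1.0) reaches `cutoff` are flagged as suspicious.
    """
    normalized = name.lower()
    if normalized in popular:
        return None
    matches = difflib.get_close_matches(normalized, popular, n=1, cutoff=cutoff)
    return matches[0] if matches else None


for candidate in ("requests", "reqeusts", "djang0", "left-pad"):
    print(candidate, "->", possible_typosquat(candidate))
```

Lowering the cutoff catches more look-alikes at the price of more false positives, which is why such checks usually feed a human review rather than blocking installs outright.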

According to GitHub's 2020 Octoverse report, a JavaScript project on GitHub with an average of 10 direct dependencies has, on average, 683 indirect dependencies.
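The gap between direct and indirect dependencies comes from the fact that every installed package brings its own dependency list. A short Python sketch of resolving the full set with a breadth-first traversal follows; the dependency graph is hypothetical, and the sketch ignores versions (real package managers may install several versions of the same package).

```python
from collections import deque


def resolve_all(graph, direct):
    """Return every package reachable from the direct dependencies (BFS).

    `graph` maps each package name to the names it depends on.
    """
    installed = set()
    queue = deque(direct)
    while queue:
        pkg = queue.popleft()
        if pkg in installed:
            continue  # a shared dependency is counted only once
        installed.add(pkg)
        queue.extend(graph.get(pkg, ()))
    return installed


# Hypothetical dependency graph, not taken from a real project.
GRAPH = {
    "framework": ["router", "parser"],
    "parser": ["encoding", "content-type"],
    "content-type": ["mime-db"],
    "cli": ["encoding", "colors"],
}
DIRECT = ["framework", "cli"]

installed = resolve_all(GRAPH, DIRECT)
print(f"{len(DIRECT)} direct -> {len(installed)} installed")  # 2 direct -> 8 installed
```

Even in this toy graph, two direct dependencies become eight installed packages; with real registries the fan-out compounds at every level.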

If you're not using automation to monitor the security risks in your dependency tree, chances are your project is vulnerable. Even when these vulnerabilities were not planted deliberately, they can still allow malicious actors to target your users or their data.

Source: https://security.googleblog.com/2021/12/understanding-impact-of-apache-log4j.html

Dependencies remain one of the preferred vectors for distributing malicious packages, and it is still relatively easy to use one of the techniques mentioned above to attack the supply chain from the dependency side: point D of the SLSA threat map, as shown in the figure.

Attribution: https://slsa.dev/

Crafting new malicious dependencies is still easy for attackers, so organizations are working to reduce the attack surface. For instance, in February 2022, GitHub introduced mandatory two-factor authentication for the top 100 npm maintainers, and PyPA is working to reduce reliance on setup.py, a key element in launching these attacks because it can execute arbitrary code at install time.

Two other important milestones for the entire OSS ecosystem are the announcement of the native integration of GitHub and GitLab with the Sigstore infrastructure, which allows anyone to easily sign artifacts and provenance attestations from their build systems, and, more recently, native npm support for generating provenance attestations, which you can see in action here.
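At the core of using a provenance attestation is checking that the artifact you downloaded is the one the attestation describes. The sketch below assumes an in-toto Statement whose signature has already been verified (Sigstore tooling such as cosign handles that part) and only compares the artifact's SHA-256 digest against the statement's `subject` list; the artifact name and contents are made up for the example.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def subject_matches(statement: dict, artifact_name: str, artifact_bytes: bytes) -> bool:
    """True if the statement lists this artifact with the same sha256 digest.

    Signature verification is deliberately out of scope: the statement is
    assumed to have been verified already (e.g. via Sigstore).
    """
    digest = sha256_hex(artifact_bytes)
    for subject in statement.get("subject", []):
        if (subject.get("name") == artifact_name
                and subject.get("digest", {}).get("sha256") == digest):
            return True
    return False


# Hypothetical artifact and a minimal in-toto v1 Statement describing it.
artifact = b"console.log('hello');\n"
statement = {
    "_type": "https://in-toto.io/Statement/v1",
    "subject": [{"name": "app.js", "digest": {"sha256": sha256_hex(artifact)}}],
    "predicateType": "https://slsa.dev/provenance/v1",
    "predicate": {},
}

print(subject_matches(statement, "app.js", artifact))            # True
print(subject_matches(statement, "app.js", artifact + b"evil"))  # False
```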

To see these numbers in practice, we will test two of the most used programming languages in 2022, JavaScript and Python, each with a well-known web framework.

The test includes a basic installation of the required packages and the packaging of the codebase as an OCI container image, to simulate an artifact ready to be shipped to production.

JavaScript

We tested this scenario with vercel/next.js, one of the most popular open source projects by number of contributors in 2022.

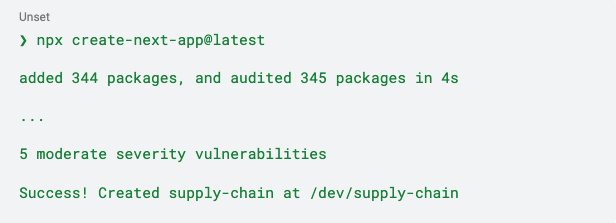

Step 1: Bootstrap the project with npm

Note that a freshly installed app already has five moderate-severity vulnerabilities reported by npm.

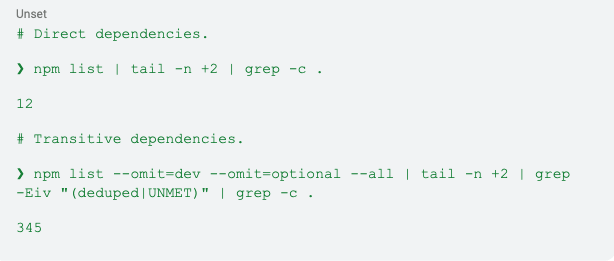

Step 2: Count direct and transitive dependencies

The project declares 12 direct dependencies, but once installed, the total number of packages reaches 345 because of transitive dependencies.
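One way to reproduce this kind of count is to read `package-lock.json` directly (`npm ls --all` shows the same tree interactively). The sketch assumes lockfile version 2 or 3, where the `packages` object maps the project root (the empty key `""`) and every installed path under `node_modules/` to its metadata; the sample lockfile is hypothetical and heavily trimmed.

```python
import json

# Hypothetical, heavily trimmed package-lock.json (lockfileVersion 3).
SAMPLE_LOCK = json.loads("""
{
  "name": "demo-app",
  "lockfileVersion": 3,
  "packages": {
    "": {"name": "demo-app", "dependencies": {"framework": "^1.0.0"},
         "devDependencies": {"linter": "^2.0.0"}},
    "node_modules/framework": {"version": "1.0.0"},
    "node_modules/helper": {"version": "3.1.0"},
    "node_modules/linter": {"version": "2.0.0", "dev": true},
    "node_modules/tiny-util": {"version": "1.0.0"},
    "node_modules/helper/node_modules/tiny-util": {"version": "0.9.0"}
  }
}
""")


def count_dependencies(lock):
    """Return (direct, installed) counts for an npm v2/v3 lockfile dict."""
    packages = lock["packages"]
    root = packages.get("", {})
    direct = set()
    for field in ("dependencies", "devDependencies", "optionalDependencies"):
        direct.update(root.get(field, {}))
    # Installed packages live under node_modules/, possibly nested; symlinked
    # workspace entries ("link": true) are not separate installs.
    installed = [
        path for path, meta in packages.items()
        if "node_modules/" in path and not meta.get("link")
    ]
    return len(direct), len(installed)


# For a real project: count_dependencies(json.load(open("package-lock.json")))
print(count_dependencies(SAMPLE_LOCK))  # (2, 5)
```

Note that the nested `tiny-util` entry counts as a separate install: npm can keep several versions of the same package side by side.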

The large number of packages in the Node.js ecosystem is primarily due to JavaScript's relatively small standard library and the tendency to follow the Unix philosophy, which inspired much of how Node.js and its ecosystem were developed:

“Write modules that do one thing well. Write a new module rather than complicate an old one.”

This philosophy can sometimes lead to distortions, such as micro-packages like left-pad. Left-pad was a JavaScript package of only 11 lines of code that padded the beginning of a string with a given character (a space by default) until it reached a specified length.
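To give a sense of scale, the whole behavior fits in a handful of lines. Below is a Python re-creation of that behavior (not the original JavaScript source); Python's built-in `str.rjust` already does the same thing, which is exactly the kind of functionality a richer standard library makes unnecessary to import as a package.

```python
def left_pad(text, length: int, fill: str = " ") -> str:
    """Pad `text` on the left with `fill` until it is at least `length` long."""
    text = str(text)
    if len(fill) != 1:
        raise ValueError("fill must be a single character")
    missing = max(length - len(text), 0)
    return fill * missing + text


print(left_pad("5", 3, "0"))  # 005
print(left_pad("abc", 2))     # abc (already long enough)
print("5".rjust(3, "0"))      # 005, same result from the standard library
```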

Yet, despite its simplicity, a large percentage of the JavaScript ecosystem depends on this function – directly or, more commonly, indirectly.

As a result, when its developer unpublished the package in 2016, part of the JavaScript ecosystem and of the internet broke (npm has since changed its policies so that this can no longer happen in the same way). You can read the full story here and here.

Attribution: https://xkcd.com/2347/

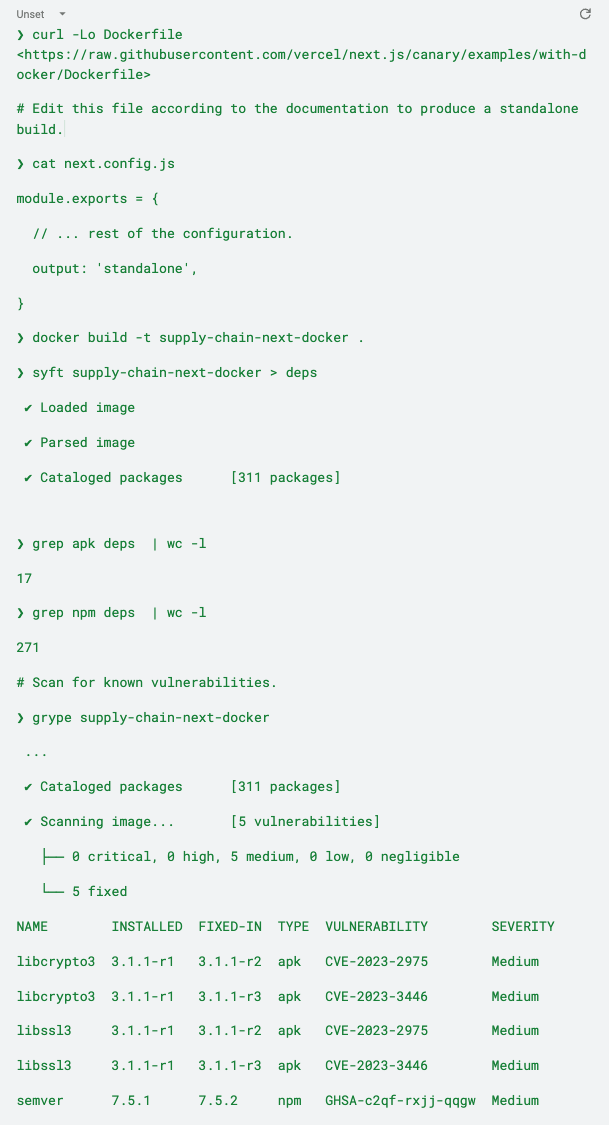

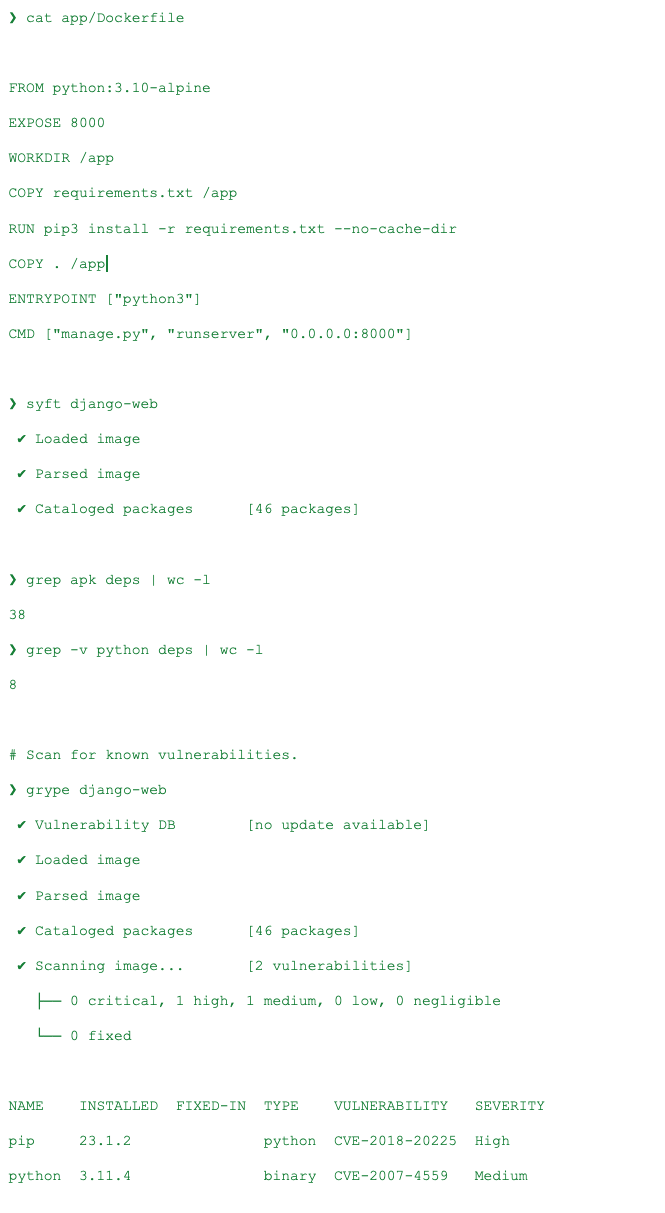

Step 3: Package the code in an OCI container and count packages and vulnerabilities with Syft and Grype

In this case, the Next.js build contains fewer packages because it uses standalone output mode, as explained in the documentation. The Alpine base image adds only 17 packages, for a total of 311 packages, and Grype detects four known vulnerabilities.
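Grype can emit its results as JSON (`grype <image> -o json`), which makes it easy to summarize findings in a pipeline. The sketch below assumes that report's layout: a top-level `matches` list whose entries carry `vulnerability.severity` and `artifact.name`. The sample report, its placeholder CVE IDs, and the threshold policy are hypothetical; for simple gating, Grype's own `--fail-on` option does the same job.

```python
from collections import Counter

SEVERITY_ORDER = ["Unknown", "Negligible", "Low", "Medium", "High", "Critical"]


def summarize(report: dict) -> Counter:
    """Count Grype matches by severity."""
    return Counter(
        m["vulnerability"].get("severity", "Unknown")
        for m in report.get("matches", [])
    )


def should_fail(report: dict, threshold: str = "High") -> bool:
    """Hypothetical CI policy: fail if any finding is at or above `threshold`."""
    limit = SEVERITY_ORDER.index(threshold)
    return any(
        SEVERITY_ORDER.index(sev) >= limit
        for sev in summarize(report)
        if sev in SEVERITY_ORDER
    )


# Placeholder report in the shape of `grype <image> -o json` (IDs are fake).
SAMPLE_REPORT = {
    "matches": [
        {"vulnerability": {"id": "CVE-0000-0001", "severity": "Medium"},
         "artifact": {"name": "libfoo", "version": "1.0"}},
        {"vulnerability": {"id": "CVE-0000-0002", "severity": "Low"},
         "artifact": {"name": "libbar", "version": "2.1"}},
        {"vulnerability": {"id": "CVE-0000-0003", "severity": "Medium"},
         "artifact": {"name": "libfoo", "version": "1.0"}},
    ]
}

print(dict(summarize(SAMPLE_REPORT)))
print(should_fail(SAMPLE_REPORT))  # False: nothing High or Critical
```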

A 2020 study found that the average JavaScript program depends on 377 packages, which is still roughly consistent with our findings. Ten percent of JavaScript packages depend on more than 1,400 third-party libraries.

Many of these dependencies are extremely small. For example, the isArray package, which is just one line of code, is downloaded (at the time of writing) over 84 million times per week.

Python

Python is the second most used and the fastest-growing language, with more than 22% year-over-year growth (source: https://github.blog/2023-03-02-why-python-keeps-growing-explained/).

For comparison with JavaScript, we use Django, the most popular web framework in the Python ecosystem, as our test case.

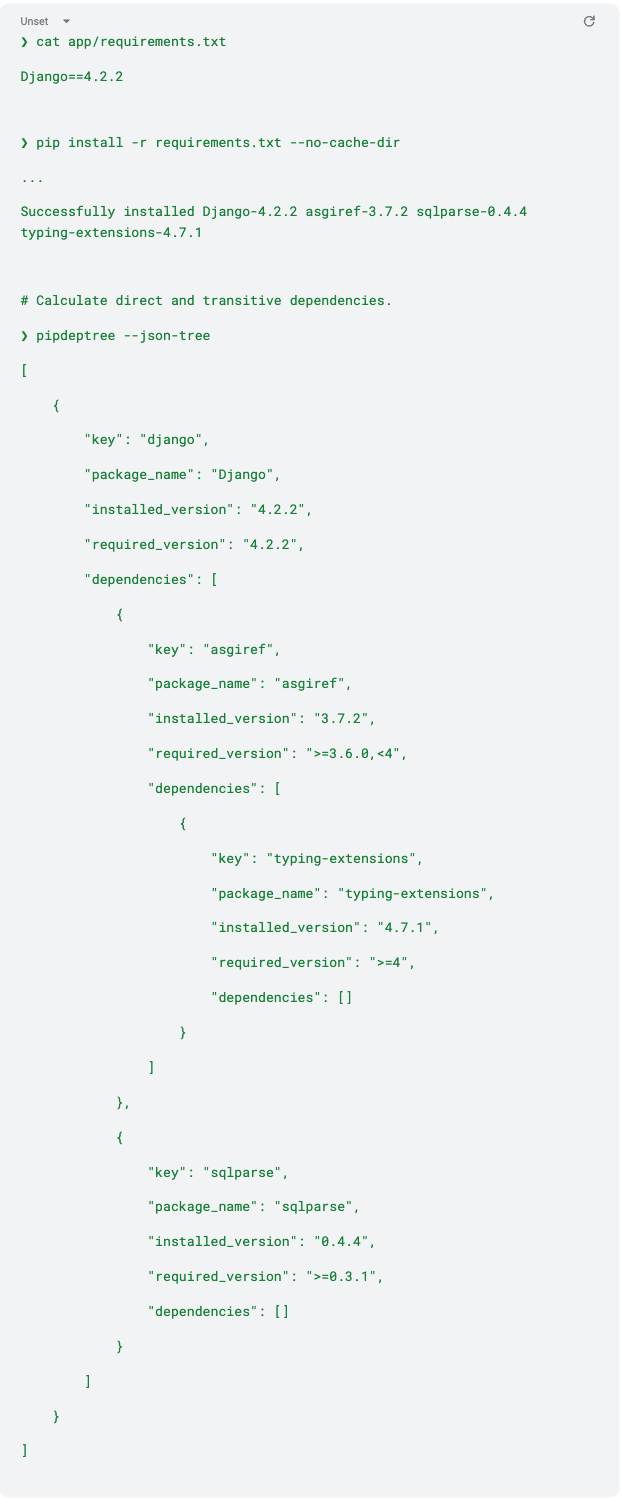

Step 1: Bootstrap the project with pip and count dependencies

Note that, unlike npm, pip does not have a built-in mechanism to warn users about known vulnerabilities.

Here, the dependency situation is very different from JavaScript: Django requires just two direct dependencies, plus one transitive dependency pulled in by the asgiref package.
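In Python, the declared requirements of an installed distribution can be read with the standard library's `importlib.metadata.requires()`, which returns the raw requirement strings (or `None`). The sketch below keeps only runtime requirements and skips those gated behind an optional extra. It parses names with a simplified regular expression instead of the `packaging` library and does not evaluate other environment markers, so treat it as an approximation; the sample distribution and its requirement strings are hypothetical.

```python
import re
from importlib import metadata

# Simplified PEP 508 name matcher; the `packaging` library does this properly.
_NAME = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def direct_requirements(dist_name: str, requires=metadata.requires) -> list:
    """Return the names a distribution depends on, excluding optional extras.

    `requires` is injectable so the function can be tested without the
    distribution installed; by default it reads installed package metadata.
    Markers other than `extra == ...` (e.g. sys_platform) are NOT evaluated.
    """
    names = []
    for req in requires(dist_name) or []:
        requirement, _, marker = req.partition(";")
        if "extra" in marker:
            continue  # only installed when the user asks for that extra
        match = _NAME.match(requirement)
        if match:
            names.append(match.group(1))
    return names


def fake_requires(name):
    # Hypothetical Requires-Dist strings for a made-up "demo" distribution.
    return {
        "demo": [
            "lib-a>=1.0",
            "lib-b<2,>=1.4",
            'win-only; sys_platform == "win32"',
            'optional-lib>=3; extra == "fast"',
        ]
    }.get(name)


print(direct_requirements("demo", requires=fake_requires))
# With Django installed, direct_requirements("django") reads its real metadata.
```

Because platform markers are not evaluated, `win-only` is reported even on Linux; a stricter version would evaluate markers with `packaging.markers`.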

Let's see how it fares, in terms of required packages and known vulnerabilities, when packaged in an OCI container.

Step 2: Package in an OCI container and scan with Syft and Grype

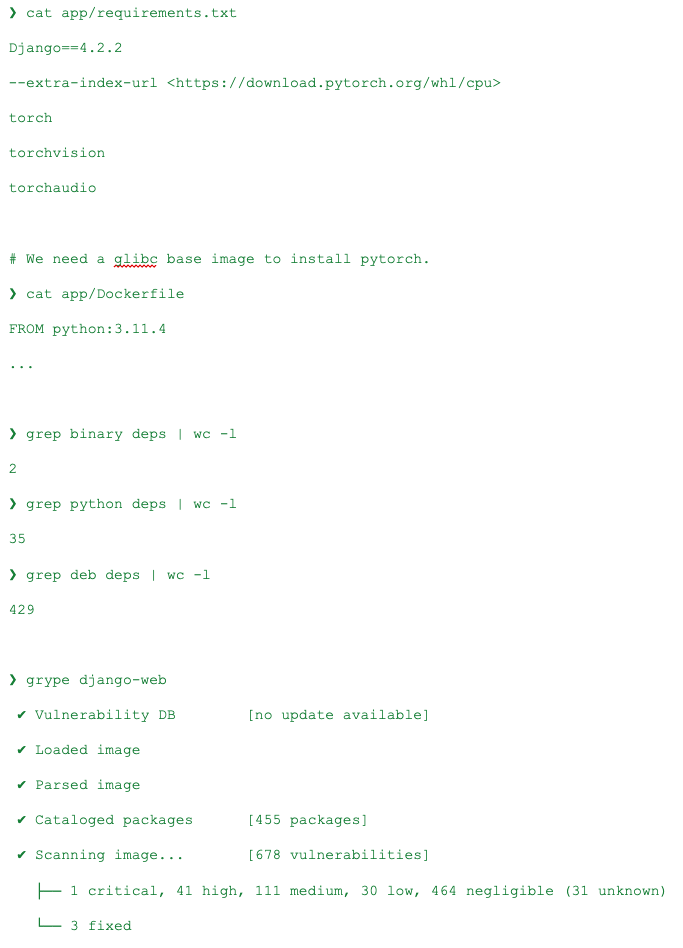

Since Python is the leading language for AI, I also tried adding PyTorch as a project dependency.

Although PyTorch and its dependencies amount to a relatively small number of Python packages (only 35), the total number of packages, including those PyTorch requires and those carried over from the Debian base image, is much larger: 429.

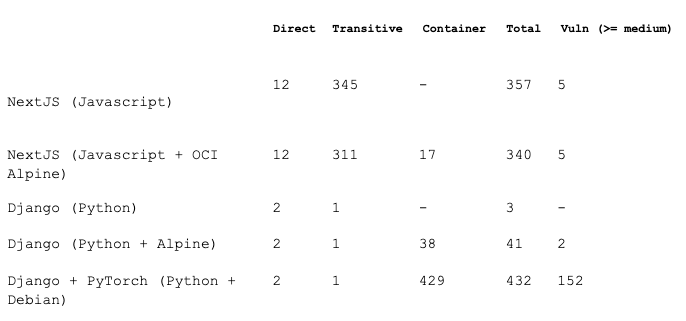
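A breakdown like this, Python packages versus operating system packages, can be computed from Syft's JSON output (`syft <image> -o json`). The sketch assumes that report has a top-level `artifacts` list whose entries include a `type` field such as `python` or `deb`; the sample data is a hypothetical, heavily trimmed report.

```python
from collections import Counter


def count_by_type(sbom: dict) -> Counter:
    """Count packages in a Syft JSON report by package type."""
    return Counter(a.get("type", "unknown") for a in sbom.get("artifacts", []))


# Hypothetical, trimmed Syft report: two Python packages on a Debian base.
SAMPLE_SYFT = {
    "artifacts": [
        {"name": "torch", "type": "python"},
        {"name": "numpy", "type": "python"},
        {"name": "libc6", "type": "deb"},
        {"name": "openssl", "type": "deb"},
        {"name": "bash", "type": "deb"},
    ]
}

counts = count_by_type(SAMPLE_SYFT)
total = sum(counts.values())
print(counts["python"], "Python packages out of", total, "in total")
```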

Test Results

We can see here the summarized results:

It is no surprise that the JavaScript project pulls in far more packages than the Python one. This reflects the nature of the JavaScript ecosystem, which favors small, single-purpose libraries that each perform one specific task.

Another finding, which may not come as a big surprise either, is that containers based on plain Debian images are large and carry many known vulnerabilities, even when they are official images. That said, reported vulnerabilities should be taken with a grain of salt: a vulnerable package is not necessarily exploitable in a given product, and scanners such as Grype, which we used in our test scenarios, cannot reflect that until the Vulnerability Exploitability eXchange (VEX) and the corresponding OpenVEX specification are adopted and implemented, an ongoing effort.

You can learn more about VEX in the NTIA one-page summary (https://www.ntia.gov/files/ntia/publications/vex_one-page_summary.pdf) and about the OpenVEX specification on its GitHub page (https://github.com/openvex/spec).
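As a rough illustration of what VEX adds, the sketch below applies an OpenVEX-style document to a list of scanner findings: any vulnerability the document marks as `not_affected` or `fixed` is dropped. It assumes the OpenVEX v0.2.0 layout, where each statement has a `vulnerability` object with a `name`, a `status`, and, for `not_affected`, a `justification`. For brevity it ignores `products`, which a real implementation must match against the scanned artifact; all IDs are placeholders.

```python
SUPPRESSING_STATUSES = {"not_affected", "fixed"}


def suppressed_ids(vex: dict) -> set:
    """Collect vulnerability IDs the VEX document says need no action."""
    ids = set()
    for st in vex.get("statements", []):
        vuln = st.get("vulnerability")
        # v0.2.0 uses an object with "name"; older drafts used a plain string.
        name = vuln.get("name") if isinstance(vuln, dict) else vuln
        if name and st.get("status") in SUPPRESSING_STATUSES:
            ids.add(name)
    return ids


def apply_vex(findings: list, vex: dict) -> list:
    """Drop findings (vulnerability IDs) suppressed by the VEX document."""
    hidden = suppressed_ids(vex)
    return [f for f in findings if f not in hidden]


# Placeholder OpenVEX-style document (IDs and product are fake).
VEX_DOC = {
    "@context": "https://openvex.dev/ns/v0.2.0",
    "statements": [
        {
            "vulnerability": {"name": "CVE-0000-0001"},
            "products": [{"@id": "pkg:oci/example-app"}],
            "status": "not_affected",
            "justification": "vulnerable_code_not_in_execute_path",
        },
        {
            "vulnerability": {"name": "CVE-0000-0002"},
            "products": [{"@id": "pkg:oci/example-app"}],
            "status": "under_investigation",
        },
    ],
}

findings = ["CVE-0000-0001", "CVE-0000-0002", "CVE-0000-0003"]
print(apply_vex(findings, VEX_DOC))  # ['CVE-0000-0002', 'CVE-0000-0003']
```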

Closing thoughts and takeaways

We are experiencing a golden age in computer programming, where we can utilize the vast knowledge amassed through the open source model created by individuals, communities, foundations, and projects.

On the one hand, powerful tools like package managers and containers have made it possible to assemble entire operating systems and applications from hundreds of thousands of external dependencies with just a few commands. On the other hand, this exposes us to a high level of complexity and to risks that these tools can hide, including the risk of shipping vulnerable or malicious code. This causes stress for developers and poses significant risks for the entire industry; in some cases, it can even endanger national security, as the SolarWinds case demonstrated.

That's why national governments have started to enact new laws and strategies specifically designed to address cybersecurity risks and mitigate software supply chain attacks. Examples include the US National Cybersecurity Strategy (https://www.whitehouse.gov/wp-content/uploads/2023/03/National-Cybersecurity-Strategy-2023.pdf) and the EU Cyber Resilience Act (https://digital-strategy.ec.europa.eu/en/library/cyber-resilience-act).

However, the latter is causing major concern among OSS communities. There is broad consensus that the way the Act is drafted risks inadvertently imposing a major burden on open source contributors and non-profit foundations. You can find more information on this issue at https://linuxfoundation.eu/cyber-resilience-act.

In 1984, in his famous paper "Reflections on Trusting Trust", Ken Thompson asked how we can ever trust code that we haven't written ourselves, and the moral was:

“You can’t trust code that you did not totally create yourself. No amount of source-level verification or scrutiny will protect you from using untrusted code. To what extent should one trust a statement that a program is free of Trojan horses? Perhaps it is more important to trust the people who wrote the software.”

In the past, it may have been possible to meet the developers who created the third-party libraries we used. Today, this is no longer feasible because of the sheer number of actors involved. Additionally, protestware attacks pose a significant threat that is not easily countered.

Fortunately, the open source software industry has responded quickly and strongly to the rapid rise of supply chain attacks; let’s see how:

- A new foundation, OpenSSF, has been created to lead the effort to secure the OSS ecosystem, fostering collaboration and working both upstream and with existing communities to advance open source security for all, with projects like Alpha-Omega and Sigstore, as well as new dedicated events, including ones co-located with the main OSS conferences.

- A new framework has been created, Supply-chain Levels for Software Artifacts, or SLSA ("salsa"), which is “a security framework, a checklist of standards and controls to prevent tampering, improve integrity, and secure packages and infrastructure. It’s how you get from "safe enough" to being as resilient as possible, at any link in the chain.”

- New standards have been created, and existing ones improved, to produce Software Bills of Materials (SBOMs) and build attestations that describe how artifacts are composed and where they come from; we are talking about SPDX, CycloneDX, and in-toto attestations.

- A new handbook for developers from CNCF TAG Security, “The Secure Software Factory - A reference architecture to securing the software supply chain”, and two concise guides from the OpenSSF Best Practices Working Group to help developers and companies adopt or release new open source software.
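To make the SBOM idea more concrete, here is a minimal sketch that turns a list of packages into a CycloneDX-style JSON document with Package URLs (purls). Only a handful of fields are filled in (`bomFormat`, `specVersion`, `version`, `components`), the chosen spec version and the package list are assumptions for illustration, and the purl construction does not handle namespaces such as npm scopes. Real projects should rely on generators such as Syft or the official CycloneDX tooling.

```python
import json


def make_cyclonedx_sbom(packages):
    """Build a minimal CycloneDX JSON SBOM from (ecosystem, name, version) tuples."""
    components = [
        {
            "type": "library",
            "name": name,
            "version": version,
            # Package URL, e.g. pkg:npm/left-pad@1.3.0 (no namespace handling).
            "purl": f"pkg:{ecosystem}/{name}@{version}",
        }
        for ecosystem, name, version in packages
    ]
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "components": components,
    }


# Illustrative package list.
sbom = make_cyclonedx_sbom([("npm", "left-pad", "1.3.0"), ("pypi", "django", "4.2")])
print(json.dumps(sbom, indent=2))
```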

Numerous new initiatives are emerging and will continue to do so in the future. Though software composition is now more complex than in the past, and we face evolving risks, we can still rely on the power of open-source communities. These communities are made up of people, companies, public sectors, and foundations working together to promote sustainability and evolution in the entire industry at the appropriate pace.

This article is a reprise of the one I wrote for my personal blog: https://www.paolomainardi.com/posts/point-of-no-return-on-managing-software-dependencies/